Radio Station Mobile Apps – My Experience

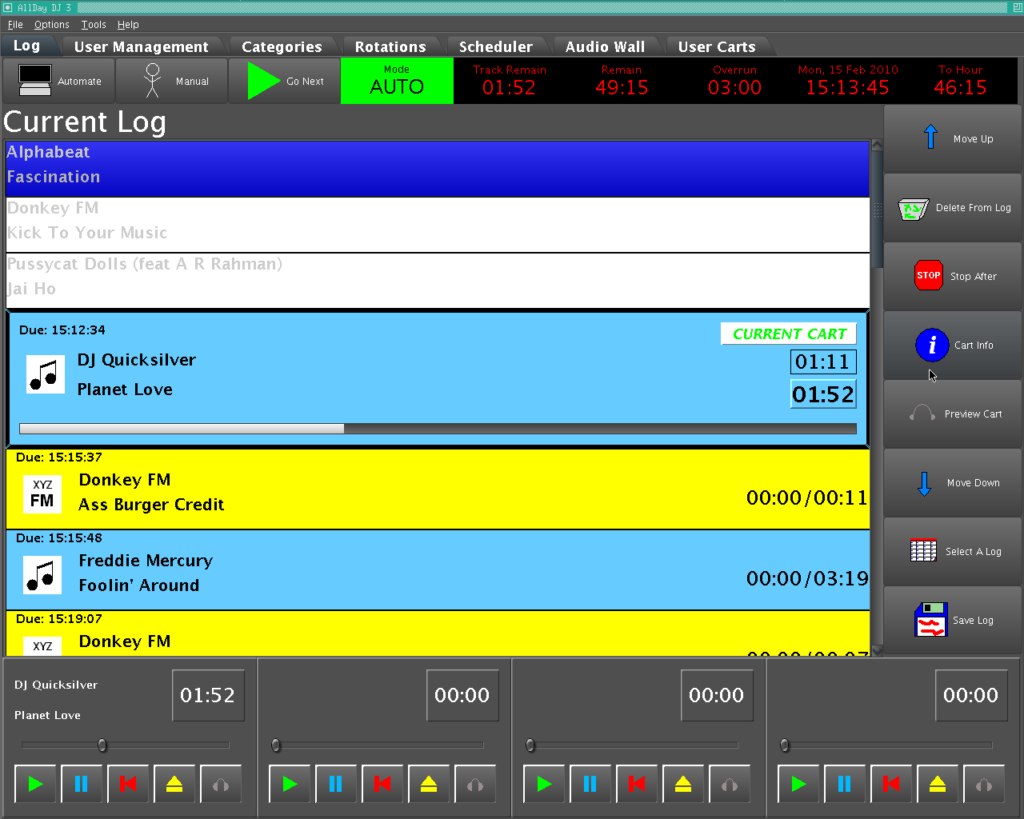

It’s funny: when I speak to developers, I occasionally (jokingly!) get brushed off as an unknowing infrastructure support type. That’s despite writing code for over 20 years, a Computer Science degree that imprinted the “Gang of Four” book on me, and a bit of “under the radar” experience writing web, desktop and mobile apps. Like many a (ex-)Broadcast Engineer, I’ve written a playout system or two as well.

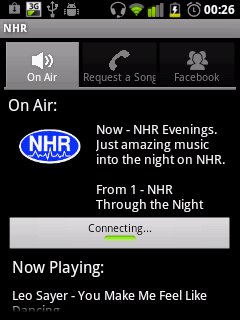

One of those areas I’ve gained a bit of experience in over the years is mobile app development for small radio stations. The first app I ever made, I ended up writing three times: once for Android in Java, again for iOS in Objective-C, and again in Java for BlackBerry!

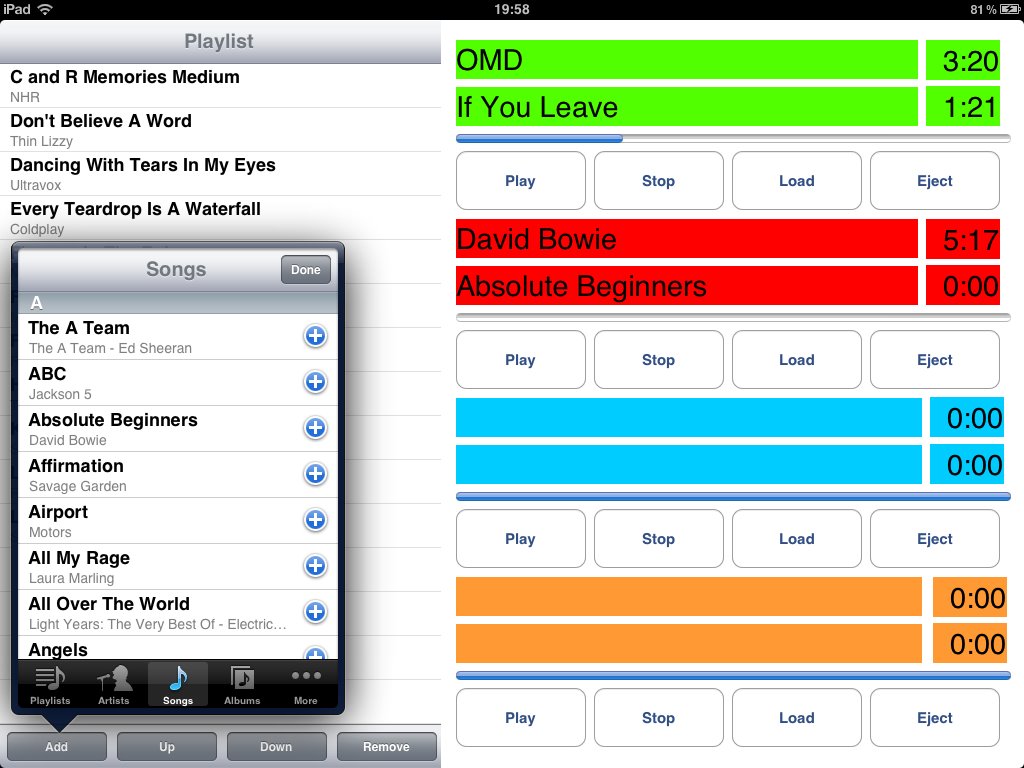

There’s also that time I wrote an OB (outside broadcast) playout system for iOS and promptly lost the source code.

None of these early attempts were pretty, but they functioned well. The unfortunate downside was that every platform needed its own native application, which meant a lot of duplicated effort to present the same features and information across platforms.

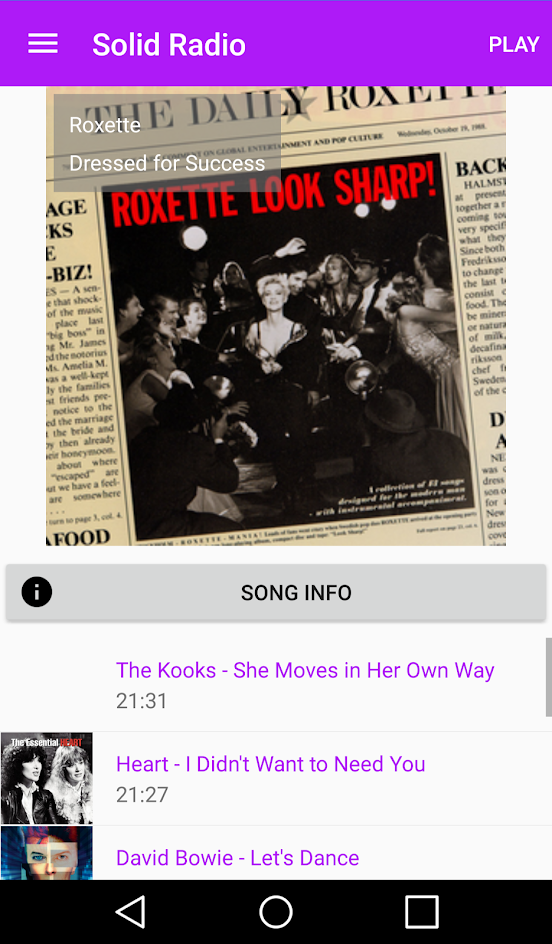

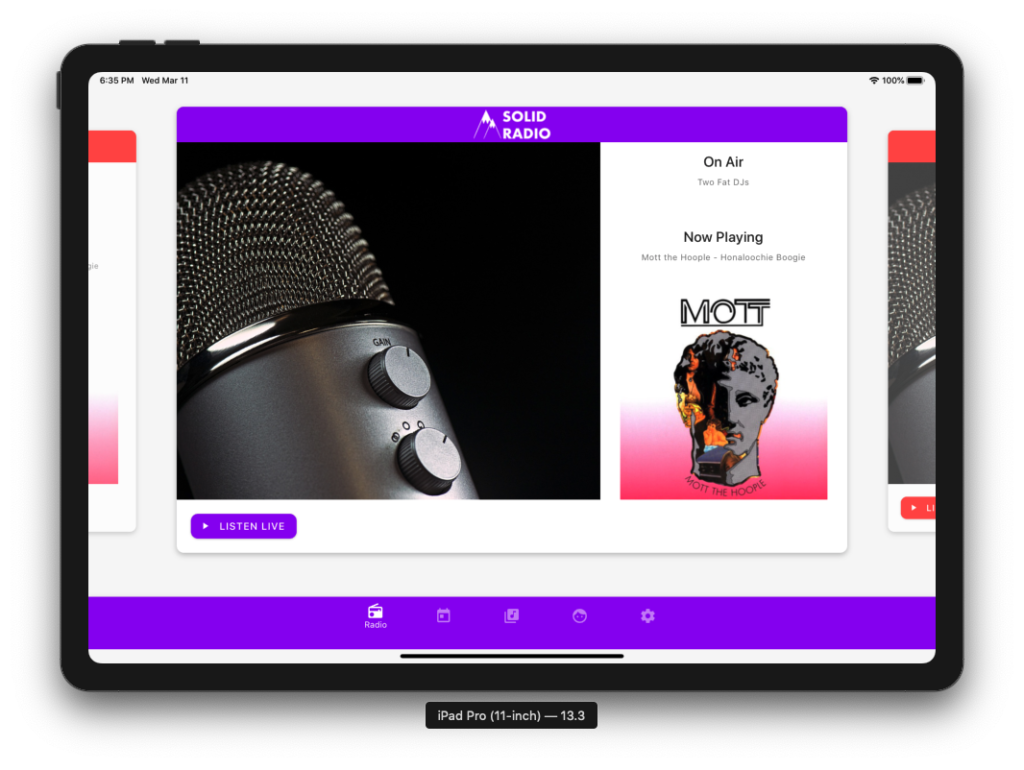

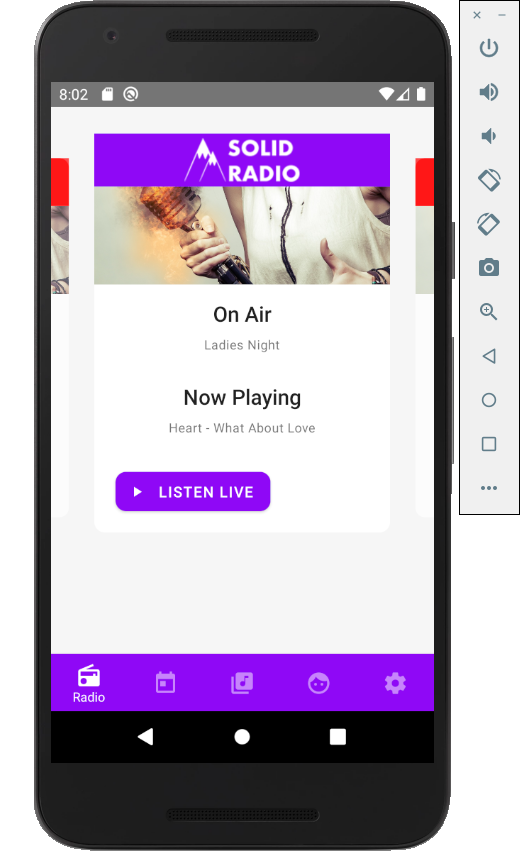

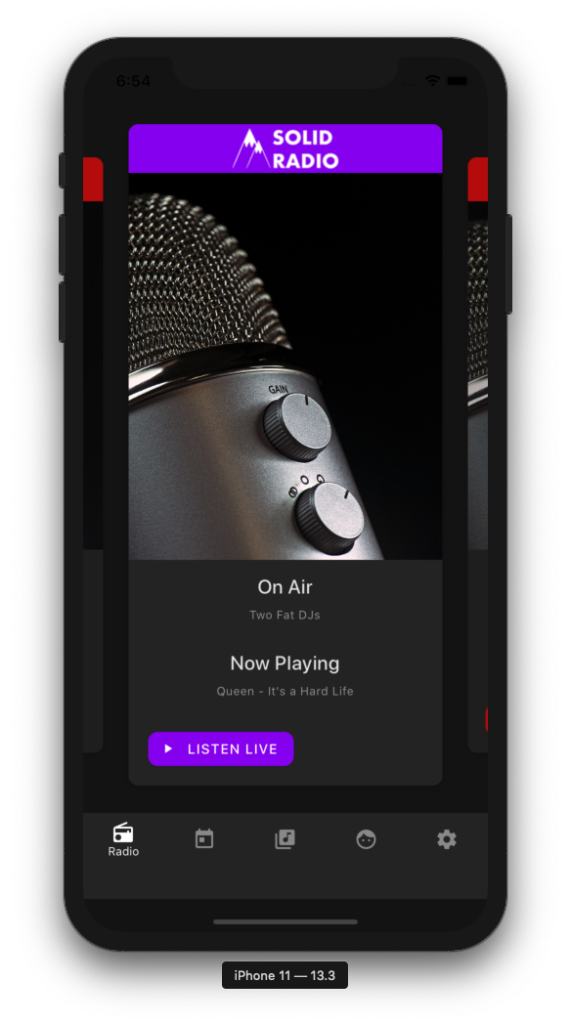

When it came to writing the current Solid Radio mobile app, I looked for something that would let me write once and compile down to each platform. The app (along with the later Alexa skill) would need to talk to a new Django/DRF-powered API replacing MusicStats.

With that, I landed on Xamarin. C# was a familiar beast: I’d encountered it writing AllDay DJ 2 (v3 was Java; v1 was VB6, for my Advanced Higher Computing qualification) and maintaining the ASP.NET-powered API and single-page application for a previous employer.

While I ended up with a functional result that looks similar on both platforms, it came with caveats. The first relates to playing audio. The code for playing an AAC/MP3 stream is different on each platform and, while written in C#, is only a very light shim over the Android or Objective-C APIs.

Android playback also ran into problems with devices whose APIs lied about AAC+ support. By default, we want listeners to pull the AAC+ stream and fall back to the equivalent MP3 stream at the same bitrate. When a device reported support it didn’t actually have, the client would attempt to play the AAC+ stream and eventually error out.

For that reason, I had to add logic to spot this behaviour and switch the user setting over to MP3. If you’ve got an Android device and your first attempt at streaming was slow, check this setting: it might have had to fail over.
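The fallback described above can be sketched in TypeScript, the language the rewrite is moving to. This is a minimal, hypothetical version rather than the app’s actual code: the stream URLs, the `Settings` shape, the `StartPlayback` callback and the 10-second timeout are all assumptions. It tries AAC+ first, treats an error or a stalled start as “the device lied”, and persists the switch to MP3 so later attempts skip the slow path.

```typescript
// Hypothetical formats and stream URLs, for illustration only.
type StreamFormat = "aacplus" | "mp3";

interface Settings {
  preferredFormat: StreamFormat;
}

// Starts playback of a URL; resolves once audio is flowing, rejects on error.
type StartPlayback = (url: string) => Promise<void>;

const STREAM_URLS: Record<StreamFormat, string> = {
  aacplus: "https://example.com/stream.aac",
  mp3: "https://example.com/stream.mp3",
};

// Rejects if `p` has not settled within `ms`, so a stalled start counts as a failure.
function withTimeout<T>(p: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error("playback timed out")), ms);
    p.then(
      (value) => {
        clearTimeout(timer);
        resolve(value);
      },
      (err) => {
        clearTimeout(timer);
        reject(err);
      },
    );
  });
}

// Tries the preferred format; if AAC+ fails despite the device claiming support,
// persists the switch to MP3 so later attempts skip the slow failing path.
async function playWithFallback(
  settings: Settings,
  start: StartPlayback,
  timeoutMs = 10_000,
): Promise<StreamFormat> {
  if (settings.preferredFormat === "aacplus") {
    try {
      await withTimeout(start(STREAM_URLS.aacplus), timeoutMs);
      return "aacplus";
    } catch {
      settings.preferredFormat = "mp3";
    }
  }
  await withTimeout(start(STREAM_URLS.mp3), timeoutMs);
  return "mp3";
}
```

A real implementation would also need to stop the abandoned AAC+ player after a timeout, since the timeout only stops waiting for it.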

I also ran into issues with Bluetooth and notifications. Each platform needed a different approach, so I added my own abstraction through the DependencyService API.
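In Xamarin.Forms, DependencyService lets shared code ask for an interface while each platform project registers its own implementation. The TypeScript below is only an analogue of that pattern, not Xamarin code: `ServiceRegistry`, `NotificationService` and the platform classes are hypothetical, and the platform methods return strings where real ones would call native notification or Bluetooth APIs.

```typescript
type Platform = "android" | "ios";

// Hypothetical cross-platform interface that shared code depends on.
interface NotificationService {
  showNowPlaying(title: string, artist: string): string;
}

// Minimal registry in the spirit of DependencyService: shared code asks
// for an implementation by key, platform start-up code registers one.
class ServiceRegistry {
  private readonly services = new Map<string, unknown>();

  register<T>(key: string, impl: T): void {
    this.services.set(key, impl);
  }

  get<T>(key: string): T {
    const impl = this.services.get(key);
    if (impl === undefined) {
      throw new Error(`No implementation registered for ${key}`);
    }
    return impl as T;
  }
}

class AndroidNotifications implements NotificationService {
  showNowPlaying(title: string, artist: string): string {
    // A real implementation would call Android's notification APIs here.
    return `android: ${artist} - ${title}`;
  }
}

class IosNotifications implements NotificationService {
  showNowPlaying(title: string, artist: string): string {
    // A real implementation would call iOS notification/now playing APIs here.
    return `ios: ${artist} - ${title}`;
  }
}

// Platform-specific wiring happens once, at start-up.
function bootstrap(platform: Platform): ServiceRegistry {
  const registry = new ServiceRegistry();
  registry.register<NotificationService>(
    "NotificationService",
    platform === "android" ? new AndroidNotifications() : new IosNotifications(),
  );
  return registry;
}

// Shared code never mentions a platform.
function announce(registry: ServiceRegistry, title: string, artist: string): string {
  return registry.get<NotificationService>("NotificationService").showNowPlaying(title, artist);
}
```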

TLS 1.2 also became a problem. The CloudFlare WAF service that fronts the API turned it on by default, which inadvertently broke the websockets used to provide the now playing information. It isn’t a CloudFlare problem, and it only affects physical Android devices.

It turns out that, despite the correct HttpClient and SSL/TLS implementation options being selected, the library used to make the websocket connections fails with TLS errors. This can’t be replicated in the emulator, and it is the root cause of now playing not working on Android right now.

Fixing it is a huge piece of work. The underlying app needs to be migrated to .NET Core 2 or 3, which unfortunately means re-engineering chunks of the UI code. It’s to the point where a rewrite from scratch would be quicker.

This doesn’t even take into account the work needed to bring CarPlay or Android Auto to the app, nor any of the shiny new podcast features we want to deliver.

That makes now a good time to look at alternatives. After a bit of searching, including restarting on Xamarin with F#, I ended up on React Native. Yup, JavaScript/TypeScript: a beast I’ve known since the Netscape days and have worked with recently in Angular.

While it’s still early days, I’ve been enjoying it so far. Websockets are supported out of the box, and tied into a Redux Saga channel, they make handling messages and errors relatively simple.
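redux-saga’s `eventChannel` wraps a callback-based source, such as a websocket, so a saga can `take` events from it in a loop. To keep the example runnable without dependencies, the sketch below re-implements a cut-down version of that idea rather than importing the library; `SocketLike`, the message shape and the action names are assumptions, not the app’s protocol. Parse failures and socket errors become ordinary events, so the consumer handles them in the same loop as good messages.

```typescript
// Sentinel that ends a channel (plays the role of redux-saga's END).
const END = Symbol("END");
type Emit<T> = (input: T | typeof END) => void;

interface Channel<T> {
  take(): Promise<T | typeof END>;
  close(): void;
}

// Cut-down analogue of redux-saga's eventChannel: `subscribe` wires up callbacks,
// calls `emit` for each event and returns an unsubscribe function.
function eventChannel<T>(subscribe: (emit: Emit<T>) => () => void): Channel<T> {
  const buffer: T[] = []; // events nobody has taken yet
  const takers: Array<(value: T | typeof END) => void> = []; // pending take() calls
  let closed = false;
  let unsubscribe: () => void = () => {};

  function close(): void {
    if (closed) return;
    closed = true;
    unsubscribe();
    // Wake any waiting consumers so their loops can exit.
    while (takers.length > 0) takers.shift()!(END);
  }

  const emit: Emit<T> = (input) => {
    if (closed) return;
    if (input === END) {
      close();
      return;
    }
    const taker = takers.shift();
    if (taker) taker(input);
    else buffer.push(input);
  };

  unsubscribe = subscribe(emit);
  if (closed) unsubscribe(); // subscribe emitted END synchronously

  return {
    async take(): Promise<T | typeof END> {
      if (buffer.length > 0) return buffer.shift()!;
      if (closed) return END;
      return new Promise<T | typeof END>((resolve) => {
        takers.push(resolve);
      });
    },
    close,
  };
}

// The minimal slice of a WebSocket-style object this sketch relies on (assumed).
interface SocketLike {
  onmessage: ((ev: { data: string }) => void) | null;
  onerror: ((ev: unknown) => void) | null;
  onclose: ((ev: unknown) => void) | null;
  close(): void;
}

// Hypothetical payload and event shapes.
interface NowPlaying { artist: string; title: string; }
type SocketEvent = { kind: "message"; nowPlaying: NowPlaying } | { kind: "error"; reason: string };

function nowPlayingChannel(socket: SocketLike): Channel<SocketEvent> {
  return eventChannel<SocketEvent>((emit) => {
    socket.onmessage = (ev) => {
      let parsed: NowPlaying;
      try {
        parsed = JSON.parse(ev.data) as NowPlaying;
      } catch {
        emit({ kind: "error", reason: "malformed message" });
        return;
      }
      emit({ kind: "message", nowPlaying: parsed });
    };
    socket.onerror = () => emit({ kind: "error", reason: "socket error" });
    socket.onclose = () => emit(END);
    // Unsubscribe: detach the handlers, then close the socket.
    return () => {
      socket.onmessage = null;
      socket.onerror = null;
      socket.onclose = null;
      socket.close();
    };
  });
}

// Consumer loop; in the app this role would be played by a saga.
async function watchNowPlaying(
  chan: Channel<SocketEvent>,
  dispatch: (action: { type: string; payload?: unknown }) => void,
): Promise<void> {
  for (;;) {
    const ev = await chan.take();
    if (ev === END) return;
    if (ev.kind === "message") dispatch({ type: "NOW_PLAYING_UPDATED", payload: ev.nowPlaying });
    else dispatch({ type: "NOW_PLAYING_FAILED", payload: ev.reason });
  }
}
```

With the real library, the loop would be a generator saga using redux-saga’s `take` and `put`, and the socket would be backed by React Native’s built-in `WebSocket`.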

The whole Redux and Sagas thing took a moment to understand, but I’m drinking the Kool-Aid now. A single immutable state that I can log out to a development console has made writing what we’ve got so far a breeze. For example, it has allowed dark mode toggles and now playing updates to reach multiple places in the app simultaneously.
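The “single immutable state” idea boils down to pure reducers: every change produces a new state object, and every subscriber reads from that one object. The sketch below uses a tiny hand-rolled store in place of Redux itself so it runs standalone; the state fields, action names and the “Off air” placeholder are hypothetical. Subscribers stand in for, say, a header bar and a full-screen player, and both see the same now playing update from a single dispatch.

```typescript
// Hypothetical slice of app state; field and action names are illustrative.
interface NowPlaying {
  artist: string;
  title: string;
}

interface AppState {
  readonly darkMode: boolean;
  readonly nowPlaying: NowPlaying | null;
}

type Action =
  | { type: "TOGGLE_DARK_MODE" }
  | { type: "NOW_PLAYING_UPDATED"; payload: NowPlaying };

const initialState: AppState = { darkMode: false, nowPlaying: null };

// Pure reducer: returns a new object for each handled action instead of mutating `state`.
function rootReducer(state: AppState, action: Action): AppState {
  switch (action.type) {
    case "TOGGLE_DARK_MODE":
      return { ...state, darkMode: !state.darkMode };
    case "NOW_PLAYING_UPDATED":
      return { ...state, nowPlaying: action.payload };
    default:
      return state;
  }
}

// Selectors: any screen can derive what it needs from the one shared state.
const selectDarkMode = (s: AppState): boolean => s.darkMode;
const selectNowPlayingLine = (s: AppState): string =>
  s.nowPlaying ? `${s.nowPlaying.artist} - ${s.nowPlaying.title}` : "Off air";

type Listener = (state: AppState) => void;

// Tiny stand-in for a Redux store: one state, many subscribers.
function createStore(initial: AppState) {
  let state = initial;
  const listeners: Listener[] = [];
  return {
    getState: (): AppState => state,
    subscribe(listener: Listener): void {
      listeners.push(listener);
    },
    dispatch(action: Action): void {
      state = rootReducer(state, action);
      // Every subscriber (header, player screen, ...) sees the same new state.
      listeners.forEach((listener) => listener(state));
    },
  };
}
```

In the app itself, Redux would provide the store and react-redux’s `useSelector` would let each component pick out its slice.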

The development cycle is also much shorter. With simulators on the development workstation, I’m not waiting for a full recompile/package/deploy each time there’s a code change. There are times it still needs to happen, such as when adding Firebase libraries for logging/monitoring/analytics, but the near-instantaneous feedback really helps with delivering results.