The Frustrating World of Writing Radio Station Skills for Alexa

Writing a radio station skill for Alexa should be relatively simple. The minimum viable product is streaming audio that plays when the user asks for it, plus perhaps some now-playing information in response to a convoluted command. You know, something like “Alexa, tell Solid Radio now playing”.

That’s really simple to achieve. If you’re using version 2 of the Alexa Skills Kit SDK for Node.js and running it in AWS Lambda, the core logic looks something like this:

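```javascript
// Metadata displayed on screen-equipped Echo devices while the stream plays
const metadata = {
  title: station.name,
  subtitle: station.slogan,
  art: {
    sources: [
      {
        contentDescription: station.name,
        url: station.logo_square,
      },
    ],
  },
};

return handlerInput.responseBuilder
  .addAudioPlayerPlayDirective(
    'REPLACE_ALL',           // stop whatever is playing and start this stream
    station.stream_aac_high, // HTTPS stream URL
    Settings.metadata_token, // unique token so cached metadata isn't reused
    0,                       // offsetInMilliseconds: start of the stream
    null,                    // expectedPreviousToken: only used with ENQUEUE
    metadata
  )
  .getResponse();
```

In our example, the `station` object comes from a REST API call to the backend shared by our mobile app, our Alexa skill and other clients. That backend also provides now-playing and EPG data.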
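The backend call itself isn’t shown above. As a rough sketch, assuming a hypothetical endpoint `https://api.example.com/stations/{id}` that returns JSON containing the `name`, `slogan`, `logo_square` and `stream_aac_high` fields used in the directive, fetching the `station` object might look like this. It relies on the global `fetch` available in Node.js 18 and later, and takes an optional `fetchImpl` parameter (also an assumption) so it can be exercised without a network.

```javascript
// Hypothetical base URL; substitute your own backend.
const API_BASE = 'https://api.example.com';

// Fields the AudioPlayer directive relies on (assumed backend schema).
const REQUIRED_FIELDS = ['name', 'slogan', 'logo_square', 'stream_aac_high'];

async function getStation(stationId, fetchImpl = fetch) {
  const url = `${API_BASE}/stations/${encodeURIComponent(stationId)}`;
  const res = await fetchImpl(url);
  if (!res.ok) {
    throw new Error(`Station lookup failed: HTTP ${res.status}`);
  }
  const station = await res.json();

  // Fail early rather than sending Alexa a directive with blank fields.
  const missing = REQUIRED_FIELDS.filter((field) => !station[field]);
  if (missing.length > 0) {
    throw new Error(`Station is missing: ${missing.join(', ')}`);
  }
  return station;
}
```

Validating up front means a broken backend response surfaces as a clear error in the Lambda logs instead of a silent or half-rendered playback card.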

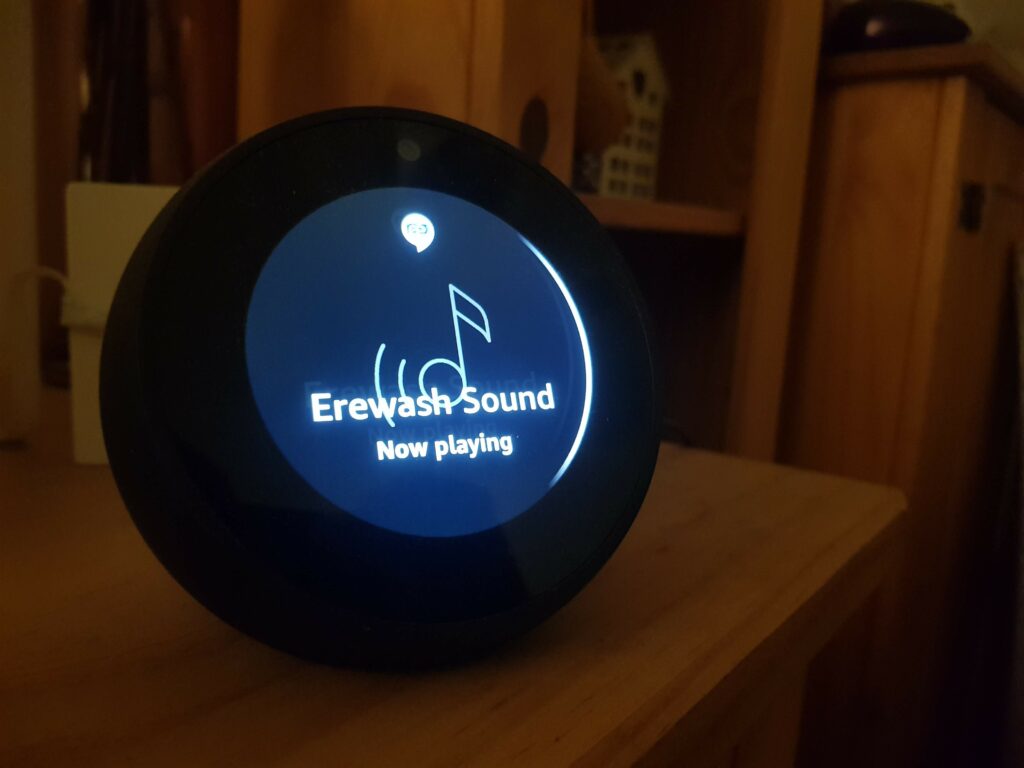

Provided we supply a station name, a unique metadata token (to defeat caching) and a logo, our Echo Spot now shows an image and the station name when the request is actioned.
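One way to produce that unique token is sketched below. The helper names `makeStreamToken` and `stationIdFromToken` are hypothetical, and the sketch assumes, as described above, that a fresh token per play request is what stops the device reusing stale metadata. AudioPlayer requests such as `AudioPlayer.PlaybackStarted` echo the token back to the skill, so keeping the station id at the front lets a handler recover which station is playing.

```javascript
const crypto = require('crypto');

// Hypothetical helper: builds a token that differs on every play request,
// so the device can't match it to metadata it has already cached.
function makeStreamToken(stationId) {
  return `${stationId}:${Date.now()}:${crypto.randomUUID()}`;
}

// Recovers the station id from a token echoed back in an AudioPlayer request.
// The timestamp and UUID contain no colons, so dropping the last two
// segments is safe even if the station id itself contains a colon.
function stationIdFromToken(token) {
  return token.split(':').slice(0, -2).join(':');
}
```

`crypto.randomUUID()` needs Node.js 14.17 or later; on its own the timestamp could collide if two requests land in the same millisecond.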

That’s not bad. We get a logo and strapline, and it looks a bit neater than the out-of-the-box defaults:

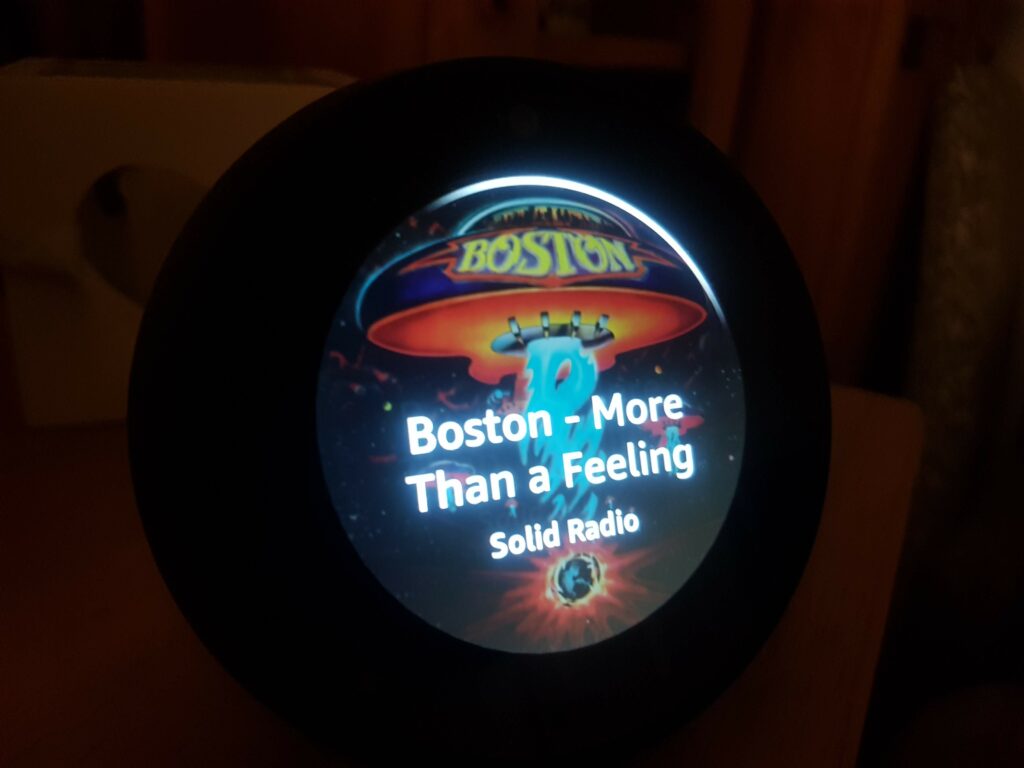

What happens if we send current song metadata instead? You know, album art, artist, title, etc.

Looks neat. Except we’ve now got a real problem: you can’t dynamically update that metadata. The Echo will happily play a stream as long as it’s served over HTTPS in one of the supported codecs, but there is no mechanism to trigger or push a metadata update, and certainly not over something like WebSockets, the tool we use to keep the mobile app in sync.

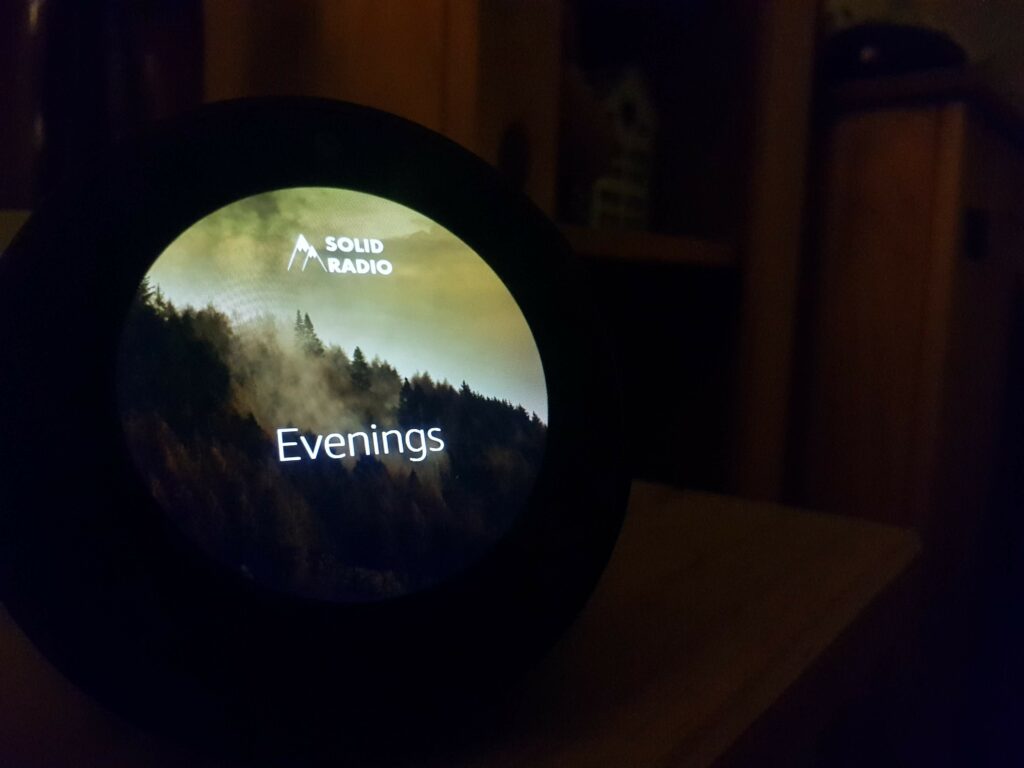
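For reference, building song-level metadata is the easy part. The sketch below assumes a hypothetical now-playing object from the backend with `artist`, `title` and `album_art_url` fields, and falls back to the station logo when there is no album art. It fills the `title`, `subtitle`, `art` and `backgroundImage` properties of the AudioPlayer metadata object.

```javascript
// Assumed now-playing shape (hypothetical):
// { artist: 'Artist', title: 'Track', album_art_url: 'https://...' }
function buildSongMetadata(station, nowPlaying) {
  // Fall back to the station logo when the track has no artwork.
  const art = nowPlaying.album_art_url || station.logo_square;
  return {
    title: nowPlaying.title,
    subtitle: `${nowPlaying.artist} on ${station.name}`,
    art: {
      sources: [{ contentDescription: `${nowPlaying.title} album art`, url: art }],
    },
    backgroundImage: {
      sources: [{ url: art }],
    },
  };
}
```

The catch is delivery: this object only reaches the device inside a new `addAudioPlayerPlayDirective('REPLACE_ALL', ...)` returned in response to a request such as the now-playing command, and replacing the stream that way typically restarts playback. Nothing on the device refreshes it on its own when the song changes.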

The SDK does have a different approach we can try: the Alexa Presentation Language (APL). It lets you build JSON-defined documents that can span multiple pages, rotate through them as a carousel and trigger events after a delay (potentially refreshing content). It sounds like exactly what we’re looking for, and looks something like this when implemented:

It seems the ideal solution to our problem. We send both the APL document and the audio streaming directive to our Echo Spot. The screen displays, but the audio doesn’t play. Well, it does play once the screen times out. There’s no way to make the two work together, and in fact Amazon explicitly calls this combination out as not allowed.
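To make the attempted combination concrete, here is a sketch of an APL `RenderDocument` directive for a now-playing screen. Everything named here is an assumption for illustration: the `buildNowPlayingApl` function, the token, the 30-second refresh delay and the now-playing fields. The document shows a two-page `Pager` (an `AutoPage` command could rotate it as a carousel) and uses a document-level `onMount` `SendEvent` with a `delay` to ask the skill for fresh data; the skill would receive an `Alexa.Presentation.APL.UserEvent` and could answer with a new document, which schedules the next refresh in turn.

```javascript
const REFRESH_DELAY_MS = 30000; // hypothetical refresh interval

function buildNowPlayingApl(station, nowPlaying) {
  return {
    type: 'Alexa.Presentation.APL.RenderDocument',
    token: 'nowPlayingScreen', // hypothetical document token
    document: {
      type: 'APL',
      version: '1.1',
      // Runs when the document is first displayed: after the delay,
      // send a UserEvent back to the skill asking for fresh data.
      onMount: [
        { type: 'SendEvent', delay: REFRESH_DELAY_MS, arguments: ['refreshNowPlaying'] },
      ],
      mainTemplate: {
        parameters: ['payload'], // bound to the datasources object below
        items: [
          {
            type: 'Pager',
            id: 'nowPlayingPager',
            width: '100vw',
            height: '100vh',
            items: [
              // Plain single-quoted strings: ${...} is APL data binding, not JS.
              { type: 'Image', source: '${payload.nowPlaying.art}', width: '100vw', height: '100vh', scale: 'best-fit' },
              { type: 'Text', text: '${payload.nowPlaying.artist} - ${payload.nowPlaying.title}' },
            ],
          },
        ],
      },
    },
    datasources: {
      nowPlaying: {
        art: nowPlaying.album_art_url || station.logo_square,
        artist: nowPlaying.artist,
        title: nowPlaying.title,
      },
    },
  };
}
```

In a handler this would go in with `handlerInput.responseBuilder.addDirective(...)` next to `addAudioPlayerPlayDirective(...)`, and that pairing is exactly the one that leaves the screen showing while the audio stays silent.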

The same restriction applies, though it isn’t documented, to the older render template method (the Display.RenderTemplate directive). It lets you display content, but only in one of a few fixed templates, and you still can’t run it in parallel with the audio stream.
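For comparison, a render template is a much simpler object. The sketch below builds a `BodyTemplate2` (image plus text) for the current song, again assuming hypothetical `artist`, `title` and `album_art_url` now-playing fields and a made-up token; in the SDK it would be attached with `responseBuilder.addRenderTemplateDirective(...)`, and it runs into the same conflict with the AudioPlayer directive.

```javascript
function buildNowPlayingTemplate(station, nowPlaying) {
  // Fall back to the station logo when the track has no artwork.
  const art = nowPlaying.album_art_url || station.logo_square;
  return {
    type: 'BodyTemplate2',       // image alongside text
    token: 'nowPlayingTemplate', // hypothetical token
    backButton: 'HIDDEN',
    title: station.name,
    image: {
      contentDescription: `${nowPlaying.title} album art`,
      sources: [{ url: art }],
    },
    textContent: {
      primaryText: { type: 'PlainText', text: nowPlaying.title },
      secondaryText: { type: 'PlainText', text: nowPlaying.artist },
    },
  };
}
```

Unlike APL there is no way to schedule a refresh from within the template, so it is strictly a one-shot display.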

Considering Amazon is keen for you as a developer to make use of the screen, it’s a shame we can’t do it sensibly with streaming audio. Yet there must be a way of doing it, as TuneIn seems to overlay its own content on top of the built-in streaming audio UI.