What’s the story on webRTC?

Ask some people, and they’ll claim webRTC isn’t making great inroads. In fact, a podcast I heard recently is what finally prompted me to write this article, because it’s a technology that’s helping change a lot in the broadcast radio world, even if it’s not setting the rest of the IT world on fire.

In case you’ve never heard of it, webRTC is an API that lets you set up real-time audio and video communication between browsers with only a small amount of JavaScript. It’s built into most of the major browsers and is (or at least was) the technology behind sites like ipDTL and Source Connect.

When it comes to the practical day-to-day stuff, I’ve seen it used for OBs (outside broadcasts) and voiceovers. It can even work well over a 3G connection, though the same could be said of a sizable SIP deployment I was heavily involved in. That project saw customized softphone software deployed to mobile handsets, allowing for broadcast-quality interviews with both external and internal parties. What’s more, you may have heard that system in use without even realizing it was there.

So, why not use webRTC for that application? Unfortunately, mobile browser support for the technology was spotty at best (at least at the time). The SIP deployment also gave us integration with the phone system. Oh, and it’s used for studio-to-studio audio links as well.

But back to the topic of webRTC and what I can tell you about it. When it was a relatively new technology, I ended up developing a little in-house test application to see what it could do. While we were able to make good-quality calls with the Opus codec that webRTC uses, there were a couple of catches.

Firstly, webRTC makes it incredibly easy to make calls between browsers. The difficult bit is brokering those calls. There is no built-in mechanism for endpoints to find each other. You need to write your own server that can pass the Session Description Protocol (SDP) messages between the users, along with the ICE candidates that describe each side’s network addresses. It’s these messages that actually negotiate the call and the codecs used.

This brokering service is also what the well-known webRTC providers for broadcast are supplying, and doing it reliably can be hard.
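To make the brokering step a little more concrete, here’s a minimal sketch of the routing core of such a server. It assumes each connected browser can be reached through a delivery callback (in a real deployment that would typically be a WebSocket connection). The SignallingBroker class, its methods and the user IDs are hypothetical, and there’s no networking, authentication or reconnection handling here, which is where most of the hard work lies.

```javascript
// Minimal in-memory signalling broker (hypothetical, for illustration only).
// No networking: each `deliver` callback stands in for a connection to one browser.
class SignallingBroker {
  constructor() {
    // Maps a user ID to the callback that delivers messages to that user
    this.peers = new Map();
  }

  // Register a user so other endpoints can find them by ID
  register(userId, deliver) {
    if (this.peers.has(userId)) {
      throw new Error(`User ${userId} is already registered`);
    }
    this.peers.set(userId, deliver);
  }

  unregister(userId) {
    this.peers.delete(userId);
  }

  // Relay an SDP offer/answer or ICE candidate from one user to another.
  // The broker never inspects the payload; it only routes it and stamps the sender.
  send(fromId, toId, message) {
    const deliver = this.peers.get(toId);
    if (!deliver) {
      return false; // recipient isn't connected
    }
    deliver({ ...message, from: fromId });
    return true;
  }
}

// Usage: a presenter's browser sends an offer, and the studio replies with an answer.
// The SDP strings are placeholders.
const broker = new SignallingBroker();
const studioInbox = [];
const presenterInbox = [];
broker.register('studio', (msg) => studioInbox.push(msg));
broker.register('presenter', (msg) => presenterInbox.push(msg));

broker.send('presenter', 'studio', { type: 'offer', sdp: 'placeholder offer SDP' });
broker.send('studio', 'presenter', { type: 'answer', sdp: 'placeholder answer SDP' });
console.log(studioInbox[0].type, presenterInbox[0].type); // offer answer
```

Keeping the broker ignorant of the SDP contents is deliberate: all the codec negotiation happens in the browsers, so the server only has to get messages to the right place, reliably.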

Another catch is that webRTC will try to negotiate a sensible bitrate between the two endpoints as part of the process. While that’s great for conferences and phone calls, it’s not so good on the broadcast side, where you usually want to pick a bitrate and stick to it. It’ll also generally use a variable bitrate rather than a constant one.

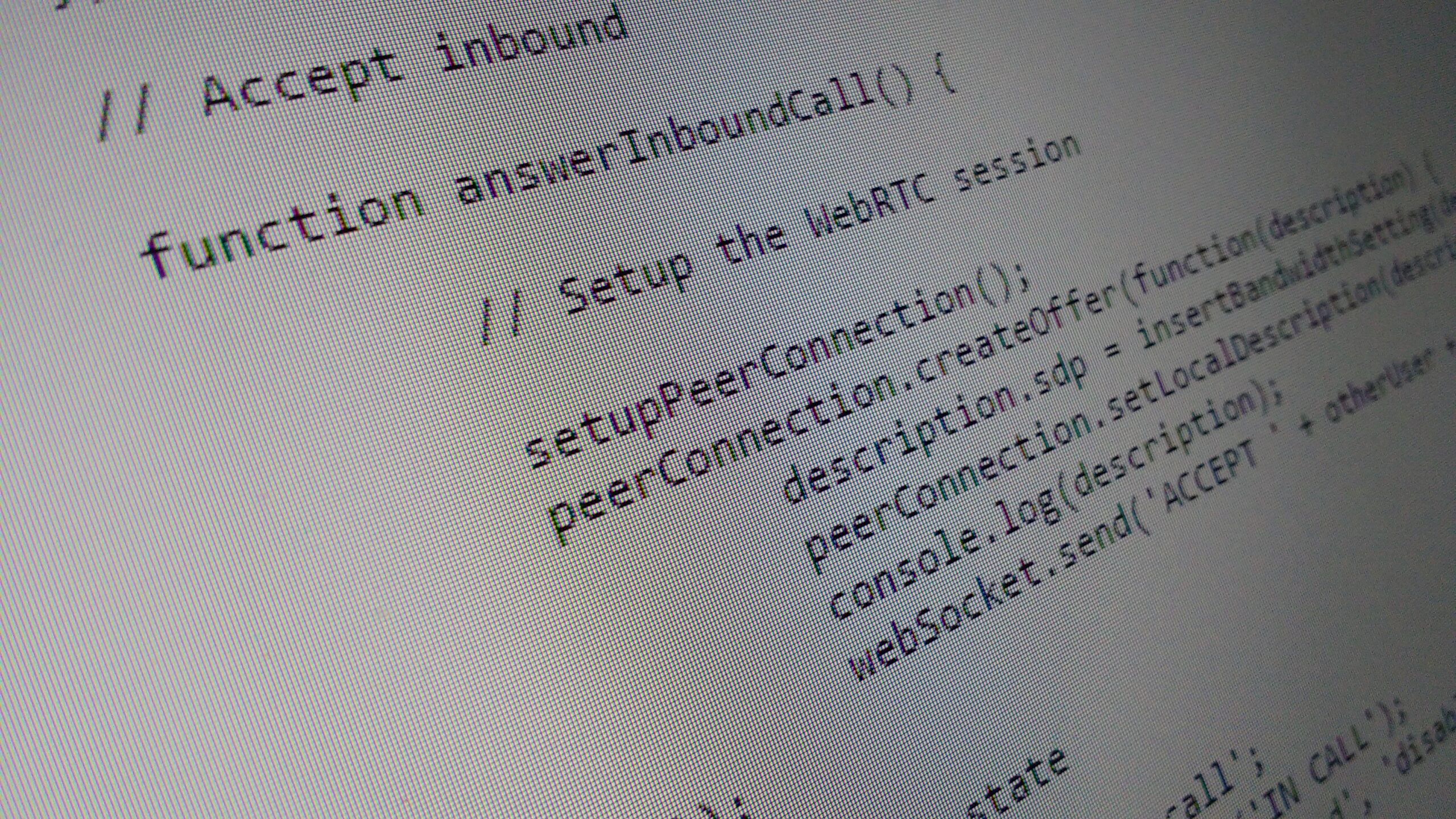

To fix this, you’ll need to edit the SDP messages sent as shown below:

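```javascript
// Bandwidth setting: rewrite the Opus format parameters and add a bandwidth line.
// Reads the bitrate (in kbps) from an input with id="bitrate" using jQuery.
function insertBandwidthSetting(sdp) {
  // Figure out what bitrate and channel count we're using
  var selectedBitrate = parseInt($('#bitrate').val(), 10);
  var stereo = selectedBitrate > 64 ? 1 : 0;

  var sdpLines = sdp.split('\r\n');

  // Find the payload type the browser assigned to Opus (e.g. "a=rtpmap:111 opus/48000/2")
  var opusPayload = null;
  for (var i = 0; i < sdpLines.length; i++) {
    var match = sdpLines[i].match(/^a=rtpmap:(\d+) opus\/48000/i);
    if (match) {
      opusPayload = match[1];
      break;
    }
  }

  var newLines = [];
  for (var j = 0; j < sdpLines.length; j++) {
    var line = sdpLines[j];

    // Replace the Opus format parameters: constant bitrate, stereo flag, target bitrate in bps
    if (opusPayload !== null && line.indexOf('a=fmtp:' + opusPayload + ' ') === 0) {
      line = 'a=fmtp:' + opusPayload + ' cbr=1; stereo=' + stereo +
        '; maxaveragebitrate=' + (1000 * selectedBitrate);
    }

    newLines.push(line);

    // Allow up to 65535kbps. Basically a silly value.
    // A media section's b= line has to come straight after its c= line.
    if (line.indexOf('c=') === 0) {
      newLines.push('b=AS:65535');
    }
  }

  return newLines.join('\r\n');
}
```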

In our example, we pull the bitrate from a user control. We then do a simple check on it: at 64kbps or below we force mono, and above 64kbps we force stereo. With those two settings ready to go, we cycle through every line of the SDP message, looking for the format parameters (a=fmtp) line that carries the Opus settings.

When we find it, we force constant bitrate (cbr=1), set the stereo/mono flag and configure the maximum average bitrate (maxaveragebitrate). This is our target bitrate in bits per second, hence multiplying the kbps value by 1000. We also add a bandwidth (b=) line with a deliberately silly value of 65535kbps, so that the session's bandwidth limit doesn't override the codec setting we chose earlier.
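To try the munging away from a browser, the same idea can be sketched as a pure function that takes the bitrate as an argument instead of reading it with jQuery. The name setOpusBroadcastParams is hypothetical. This sketch assumes it should only touch the Opus fmtp line (found through its a=rtpmap entry), uses the standard b=AS: form for the bandwidth line (AS is measured in kbps), and places it straight after the audio section's c= line, since SDP orders a media section's lines as m=, i=, c=, b=, k=, then a=.

```javascript
// Hypothetical standalone version of the SDP munging, with the bitrate passed in.
// Follows the article's rules: >64kbps means stereo, and b=AS is set to a silly 65535kbps.
function setOpusBroadcastParams(sdp, kbps) {
  const stereo = kbps > 64 ? 1 : 0;
  const lines = sdp.split('\r\n');

  // Find the payload type assigned to Opus, e.g. "a=rtpmap:111 opus/48000/2"
  let opusPayload = null;
  for (const line of lines) {
    const match = /^a=rtpmap:(\d+) opus\/48000/i.exec(line);
    if (match) {
      opusPayload = match[1];
      break;
    }
  }
  if (opusPayload === null) {
    return sdp; // no Opus on offer, so leave the SDP untouched
  }

  const out = [];
  let inAudioSection = false;
  for (let line of lines) {
    if (line.startsWith('m=')) {
      inAudioSection = line.startsWith('m=audio');
    }
    // Drop any existing AS bandwidth line in the audio section; we add our own below
    if (inAudioSection && line.startsWith('b=AS:')) {
      continue;
    }
    if (line.startsWith(`a=fmtp:${opusPayload} `)) {
      // Constant bitrate, channel flag, and target bitrate in bits per second
      line = `a=fmtp:${opusPayload} cbr=1; stereo=${stereo}; maxaveragebitrate=${kbps * 1000}`;
    }
    out.push(line);
    // b= goes straight after c= within the audio media section
    if (inAudioSection && line.startsWith('c=')) {
      out.push('b=AS:65535');
    }
  }
  return out.join('\r\n');
}

// Usage with a trimmed-down, illustrative audio-only offer
const offer = [
  'v=0',
  'o=- 4611731400430051336 2 IN IP4 127.0.0.1',
  's=-',
  't=0 0',
  'm=audio 9 UDP/TLS/RTP/SAVPF 111',
  'c=IN IP4 0.0.0.0',
  'a=mid:0',
  'a=rtpmap:111 opus/48000/2',
  'a=fmtp:111 minptime=10;useinbandfec=1',
  '',
].join('\r\n');

console.log(setOpusBroadcastParams(offer, 128));
```

With 128kbps the fmtp line becomes `a=fmtp:111 cbr=1; stereo=1; maxaveragebitrate=128000`; passing 64 instead gives `stereo=0` and `maxaveragebitrate=64000`, matching the mono threshold described above.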

Basically, it's a fair bit of work to get webRTC to behave like an ISDN codec would. Thankfully, this approach works in all browsers that support webRTC.

Talking of which, when I was originally doing this work, there was no single block of JavaScript that would work on both Firefox and Chrome. You needed to do a few checks in order to determine which API calls you needed to make.
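The kind of checks involved can be sketched as below. It assumes the vendor-prefixed names Chrome and Firefox used at the time (webkitRTCPeerConnection, mozRTCPeerConnection, and the prefixed callback-style getUserMedia variants). The pickWebRtcApi helper is hypothetical, and it takes a scope argument standing in for window so it can run outside a browser. These days the unprefixed APIs are standard, and the adapter.js shim is the usual way to smooth over any remaining differences.

```javascript
// Hypothetical helper that picks whichever webRTC entry points a browser exposes.
// `scope` stands in for `window` so the function can be exercised outside a browser.
function pickWebRtcApi(scope) {
  // Unprefixed constructor first, then the old Chrome and Firefox prefixed versions
  const PeerConnection =
    scope.RTCPeerConnection ||
    scope.webkitRTCPeerConnection ||
    scope.mozRTCPeerConnection ||
    null;

  const nav = scope.navigator || {};
  let getUserMedia = null;
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === 'function') {
    // Modern, promise-based API
    getUserMedia = (constraints) => nav.mediaDevices.getUserMedia(constraints);
  } else {
    // Legacy callback-based API (constraints, onSuccess, onError), wrapped in a promise
    const legacy = nav.getUserMedia || nav.webkitGetUserMedia || nav.mozGetUserMedia;
    if (legacy) {
      getUserMedia = (constraints) =>
        new Promise((resolve, reject) => legacy.call(nav, constraints, resolve, reject));
    }
  }

  return {
    PeerConnection,
    getUserMedia,
    supported: Boolean(PeerConnection && getUserMedia),
  };
}

// Usage in a browser page:
// const api = pickWebRtcApi(window);
// if (!api.supported) { alert('Sorry, this browser does not support webRTC'); }
// else { api.getUserMedia({ audio: true }).then((stream) => { /* add to a new api.PeerConnection() */ }); }
```

Wrapping the legacy callback API in a promise means the rest of the application only has to deal with one calling style, whichever browser it lands in.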

Another point worth considering is STUN and TURN servers. In normal operation, webRTC attempts to build a direct connection between the two endpoints. This works quite well where there are no obstructions in the way. In fact, the system is clever enough to use internal IP addresses when both endpoints are hidden behind the same NAT appliance. When they're behind different NATs, a STUN server helps each endpoint discover its public address so that a direct connection can still be attempted.

That said, there are times when firewalls will block a direct connection altogether. For these, a TURN server is needed. Instead of connecting directly to each other, both ends make an outbound connection to the TURN server, and all audio traffic is relayed through it.
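In code, STUN servers, and the TURN servers that relay media when a direct path fails, are handed to the browser through the iceServers list of the configuration object passed to new RTCPeerConnection(). The sketch below uses a hypothetical buildIceConfig helper, and the example.org URLs and credentials are placeholders; iceServers, urls, username, credential and iceTransportPolicy are the standard configuration field names.

```javascript
// Hypothetical helper that builds an RTCPeerConnection configuration object.
// Server URLs and credentials are supplied by the caller; none are built in.
function buildIceConfig({ stunUrls = [], turnUrls = [], username, credential, relayOnly = false } = {}) {
  if (relayOnly && turnUrls.length === 0) {
    throw new Error('relayOnly needs at least one TURN server');
  }

  const iceServers = [];
  if (stunUrls.length > 0) {
    // STUN: helps each endpoint discover its public address for a direct connection
    iceServers.push({ urls: stunUrls });
  }
  if (turnUrls.length > 0) {
    if (!username || !credential) {
      throw new Error('TURN servers need a username and credential');
    }
    // TURN: relays the media when a direct connection can't be made
    iceServers.push({ urls: turnUrls, username, credential });
  }

  return {
    iceServers,
    // 'relay' forces all traffic through TURN; 'all' lets webRTC try direct paths first
    iceTransportPolicy: relayOnly ? 'relay' : 'all',
  };
}

// Usage with placeholder servers and credentials
const config = buildIceConfig({
  stunUrls: ['stun:stun.example.org:3478'],
  turnUrls: ['turn:turn.example.org:3478?transport=udp', 'turns:turn.example.org:5349'],
  username: 'studio-link',
  credential: 'not-a-real-password',
});
console.log(JSON.stringify(config, null, 2));
// In a browser: const pc = new RTCPeerConnection(config);
```

Setting relayOnly (and so iceTransportPolicy: 'relay') is handy when testing, since it proves the TURN path works before you end up relying on it from behind a hostile firewall.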

So, the point of all this is that webRTC does have a useful place in broadcast. It's also possible for you to make use of the technology yourself, allowing for low-cost, high-quality audio links using Chrome or Firefox (but not IE!). While it's never going to compete with a full SIP deployment, the fact it's being used by broadcasters of all sizes tells me there's a future in it, especially if it helps kill off those ISDN lines and the mix of codecs that seems to confuse anyone who isn't technical.