SDN – Driving Cumulus/Mellanox (Nvidia) Networks from TFS

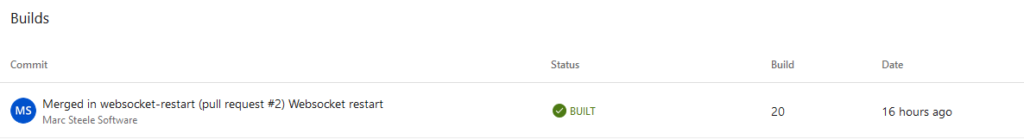

I’m not going to win any prizes for “catchiest” title, but it’s something we’ve been beavering away on at work: a new datacentre network configured through a CI pipeline. At the moment, the initial trigger is manual. YAML files are committed to git and merged through pull requests. Assuming my colleague assesses I’m reasonably sane that day, away off down the pipeline it goes.

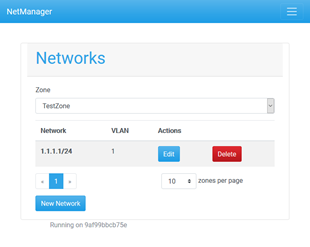

The eventual plan is a REST API with Angular front-end to enforce business rules and remove the manual part of making network changes. We can generate some of the YAML files right now but it’s a slow burner project.

As it stands today, configuration changes on this infrastructure go through a TFS deployment pipeline. The entire process is git commit -> PR/merge -> testing -> deployment to production. As much as pipelines are the normal go-to in today’s software development, making network infrastructure play nice has been a bit of a challenge.

If only it was as easy as building containers on the fly or using App Center to build and release mobile apps. The time and effort I’ve saved in personal projects has been a game changer!

The biggest issue to date has been building a test environment. We Vagrant up a graph of Cumulus VMs connected in the same arrangement as the physical switches. While a range of VM images exist for Cumulus, there are no Hyper-V images. We’re a Windows shop that uses Hyper-V across the board, meaning ESXi or Xen aren’t practical options.
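The "same arrangement as the physical switches" part is worth checking mechanically. Below is a minimal sketch of that idea, assuming each link is described as a pair of (switch, port) endpoints; the switch and port names are made up for illustration and are not our real cabling.

```python
def normalise(links):
    """Treat each link as an unordered pair of (switch, port) endpoints."""
    return {frozenset(link) for link in links}


def topology_diff(physical, virtual):
    """Return (missing, extra): links the test graph lacks, and links it
    has that the physical network does not."""
    p, v = normalise(physical), normalise(virtual)
    return p - v, v - p


# Hypothetical cabling, purely illustrative.
physical = [
    (("spine01", "swp1"), ("leaf01", "swp51")),
    (("spine01", "swp2"), ("leaf02", "swp51")),
]
virtual = [
    (("leaf01", "swp51"), ("spine01", "swp1")),  # same link, endpoints swapped
]

missing, extra = topology_diff(physical, virtual)
```

Here `missing` holds the spine01/leaf02 link that the virtual graph forgot, and `extra` is empty.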

The disk image does translate across from ESXi to Hyper-V but fails to find the NIC on boot. Turns out even the Tulip drivers have not been compiled into the kernel. Re-compiling a custom kernel or messing with drivers in the provided images doesn’t seem like a good move for a test environment.

Our workaround is VirtualBox nested inside Hyper-V. It means testing can be painfully slow, but it does work. We’ve stopped a number of faulty configurations from hitting the physical switches.

On the plus side, we run a PyTest integration test suite which generates a JUnit XML results file TFS can ingest. The upshot is we get a complete test history to be judged on. Red is good, right?
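To give a flavour of what such a test can look like, here is a hedged sketch rather than anything lifted from our suite. The peer names, the `EXPECTED_PEERS` table and the `missing_peers` helper are all hypothetical, and the observed state is hard-coded so the example runs on its own.

```python
# Hypothetical expectation: which BGP peers each leaf should have established.
EXPECTED_PEERS = {
    "leaf01": {"spine01", "spine02"},
    "leaf02": {"spine01", "spine02"},
}


def missing_peers(expected, observed):
    """Return {switch: peers that should be established but are not}."""
    gaps = {}
    for switch, peers in expected.items():
        absent = peers - observed.get(switch, set())
        if absent:
            gaps[switch] = absent
    return gaps


def test_all_expected_peers_established():
    # A real suite would gather this from the Vagrant VMs; it is
    # hard-coded here so the sketch is self-contained.
    observed = {
        "leaf01": {"spine01", "spine02"},
        "leaf02": {"spine01", "spine02"},
    }
    assert missing_peers(EXPECTED_PEERS, observed) == {}
```

Running pytest with `--junitxml=results.xml` writes the JUnit-style report that a build server can pick up.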

Wall clock time is also an issue for the deployment / configuration step. We use Ansible to push the configuration onto the switches. After all, they are just Linux (ONIE) boxes running switchd, FRR and friends. We could gain some time back by not running the configuration for logging, authentication and hardening on every run, but we’re reluctant to run changes in parallel. If something goes wrong, we’d rather only the one switch it failed on was affected.
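The one-switch-at-a-time behaviour boils down to something like the sketch below. It is an illustration of the idea only: `apply` is a hypothetical callable standing in for whatever pushes config to a single switch (for instance a wrapper around an `ansible-playbook --limit <host>` run), and Ansible’s own `serial` play keyword can batch hosts in a similar spirit.

```python
def rollout_one_at_a_time(switches, apply):
    """Apply config to each switch in order and stop at the first failure.

    `apply(name)` is a hypothetical callable returning True on success.
    Returns (done, failed): the switches configured successfully and the
    name of the switch that failed, or None if every switch succeeded.
    """
    done = []
    for name in switches:
        if not apply(name):
            # Stop here so the remaining switches are left untouched.
            return done, name
        done.append(name)
    return done, None


# Usage with a fake `apply` that fails on leaf02.
attempted = []


def fake_apply(name):
    attempted.append(name)
    return name != "leaf02"


done, failed = rollout_one_at_a_time(["leaf01", "leaf02", "leaf03"], fake_apply)
```

With the fake above, leaf03 is never attempted: the trade-off is a slower run in exchange for a failure that stays on a single switch.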

Could our REST API directly generate the config files, restart services, etc.? Of course! Would it be a good plan? I’m not so convinced. It would be re-inventing the wheel, and probably doing so less reliably. Driving a configuration tool like Ansible directly makes more sense.

Now, before anyone asks, we did look at the REST APIs provided directly on the Cumulus switches. If they operated in a desired state style, they’d be the core of our approach. Unfortunately, they pretty much just provide a way of running CLI commands over HTTP. Not a bad plan for extracting data, but it feels a lot like the hackery I had to do with Aruba controllers to screen scrape goodput data out of v6 systems (if I remember correctly, the web front end was making requests along the lines of blah.php?command=set+foo+bar+baz – it’s been a few years).
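For anyone wondering what I mean by "desired state style": you hand over what the configuration should look like and the system works out the commands, rather than you sending the commands yourself. A minimal sketch of that difference, using made-up setting names and a hypothetical `plan_changes` helper (this is not the Cumulus API):

```python
def plan_changes(current, desired):
    """Work out the actions needed to move `current` to `desired`.

    Both arguments are flat dicts of setting name -> value. Returns a
    list of ("set", key, value) and ("unset", key) tuples.
    """
    actions = []
    for key in sorted(desired):
        if key not in current or current[key] != desired[key]:
            actions.append(("set", key, desired[key]))
    for key in sorted(current):
        if key not in desired:
            actions.append(("unset", key))
    return actions


# Hypothetical settings, for illustration only.
current = {"swp1.mtu": 1500, "swp2.mtu": 9216}
desired = {"swp1.mtu": 9216, "swp3.mtu": 9216}

actions = plan_changes(current, desired)
```

The useful property is that asking for a state you are already in produces an empty plan, so re-running is harmless. A "run this CLI command over HTTP" API gives you no such guarantee.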